Cyber Exposure

Machine Learning Could Make Hackers Practically Unstoppable: Are You Ready?

The idea of artificial intelligence has fueled both fascination and fear ever since HAL 9000 refused to open the pod bay doors in 1968. But 50 years later, AI bears only minimal resemblance to Arthur C. Clarke and Stanley Kubrick’s dark vision.

AI in the real world is providing vital clinical support for doctors, allowing retailers to offer curated shopping experiences and driving efficiencies and performance across a broad range of industries.

Unfortunately, the cyber-crime industry is no exception.

A 2014 study from McAfee estimated the global cost of cyber crime at $445 billion. The firm’s most recent analysis places that figure at an astounding $600 billion — about 0.8 percent of global GDP.

The most significant change within that time span is the explosion of AI and machine learning, thanks to the wide availability of GPUs that make processing power faster and cheaper; the proliferation of inexpensive sensor technology and wireless connectivity; and the birth of the cloud, providing virtually unlimited storage capacity for the vast amount of data being collected every day.

The terms AI and machine learning are often used interchangeably, but they’re not the same. AI refers to all machines that can perform tasks characteristic of human intelligence. Basic AI applications rely on code with rules and decision trees: “If X, then perform Y.” Consider a spam filter. It can be programmed to delete or divert all emails from specific addresses, just as a human would otherwise do manually.
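The “If X, then perform Y” idea can be sketched in a few lines of Python. This is only an illustration, not how any particular product works: the blocklist addresses and the `route_email` function name are made up for the example.

```python
# Hypothetical blocklist; these addresses are invented for illustration.
BLOCKED_SENDERS = {"promo@example.com", "offers@example.net"}


def route_email(sender: str) -> str:
    """Apply one fixed rule: if the sender is on the blocklist, divert to junk."""
    if sender.strip().lower() in BLOCKED_SENDERS:
        return "junk"
    return "inbox"


if __name__ == "__main__":
    print(route_email("Promo@Example.com"))      # sender is on the blocklist
    print(route_email("colleague@example.org"))  # sender is not on the blocklist
```

The filter never changes on its own. A sender who switches to a new address gets through until a person updates the list by hand.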

Machine learning is a subset of AI. Rather than following hand-written rules, a machine learning algorithm is fed huge amounts of data and adjusts itself, improving as it goes.
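To make “adjusts itself” concrete, here is a minimal sketch of a learned spam filter, assuming a toy perceptron over individual words. The six training messages, the `train` and `predict` names, and the choice of a perceptron are all assumptions made for illustration; real filters use far more data and more capable models.

```python
from collections import defaultdict

# Tiny invented training set: (message text, label), where 1 = spam, 0 = not spam.
TRAINING_DATA = [
    ("win a free prize now", 1),
    ("claim your free money", 1),
    ("free prize waiting claim now", 1),
    ("meeting agenda for monday", 0),
    ("please review the quarterly report", 0),
    ("lunch on monday", 0),
]


def train(examples, epochs=10):
    """Learn one weight per word by correcting mistakes on the examples."""
    weights = defaultdict(float)
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            words = text.split()
            score = bias + sum(weights[w] for w in words)
            predicted = 1 if score > 0 else 0
            error = label - predicted  # 0 when the guess was right
            if error:
                # Nudge the weights of the words in this message
                # toward the correct answer.
                for w in words:
                    weights[w] += error
                bias += error
    return weights, bias


def predict(model, text):
    """Return 1 (spam) or 0 (not spam) for a new message."""
    weights, bias = model
    score = bias + sum(weights.get(w, 0.0) for w in text.split())
    return 1 if score > 0 else 0


if __name__ == "__main__":
    model = train(TRAINING_DATA)
    print(predict(model, "free prize inside"))
    print(predict(model, "agenda for the meeting"))
```

No one wrote a rule saying that “free” is suspicious. The weights come entirely from the labeled examples, so feeding the algorithm different data produces a different filter.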

Deep learning, more or less, is machine learning on steroids. Deep learning can solve complex problems with brain-like neural networks that act in layers. Each layer is trained to learn a specific feature, and all of the features are connected, creating a self-learning entity with immense depth.

A Wide Range of Threats

The phenomenal power and versatility of machine learning and deep learning make them ideal tools for the cyber security arsenal. Self-teaching AI allows companies to detect vulnerabilities or anomalous behavior and act independently to identify and block attacks.

“It’s really a behavioral science. Artificial intelligence is enhancing your ability to compute that behavioral science with an enormous amount of metadata,” said Shiraz Saeed, national practice leader for cyber risk, Starr Companies. “Ultimately we’re all looking for the same thing — pattern recognition.”

When you build a better mousetrap, though, the mice get craftier too. As quickly as security experts can leverage machine learning to identify anomalies and create dynamic defenses, criminals can use the same tools to map networks, find targets, identify weak spots, launch custom attacks and improve their ability to hide from sophisticated detection programs.

The range of criminal applications for machine learning is expansive. “AI is a tool, and the uses to which it can be put are limited only really by the imagination of the user,” said Tim Zeilman, vice president and cyber coverage expert with Hartford Steam Boiler.

Security experts expect criminals to replace botnets with intelligent clusters of compromised devices called hivenets to create more effective attack vectors. Hivenets will leverage self-learning to effectively target vulnerable systems at an unprecedented scale, communicating and acting on shared information. Hivenets will be able to expand into swarms, identifying and targeting different attack vectors simultaneously while slowing down mitigation and response.

“It makes it much more difficult to combat the attack due to the speed and scale,” said Tim Marlin, senior managing director and head of cyber and professional liability, The Hartford.

“A self-learning hivenet … will often act to move to a different attack vector once their initial vector has been cut off.”

In the area of ransomware, already a significant threat, next-level attacks could involve cloud service providers and other commercial services. With the aid of machine learning, multi-vector attacks could scan for, detect and exploit weaknesses in a cloud provider’s system, simultaneously disrupting service for hundreds or thousands of businesses and millions of their customers.

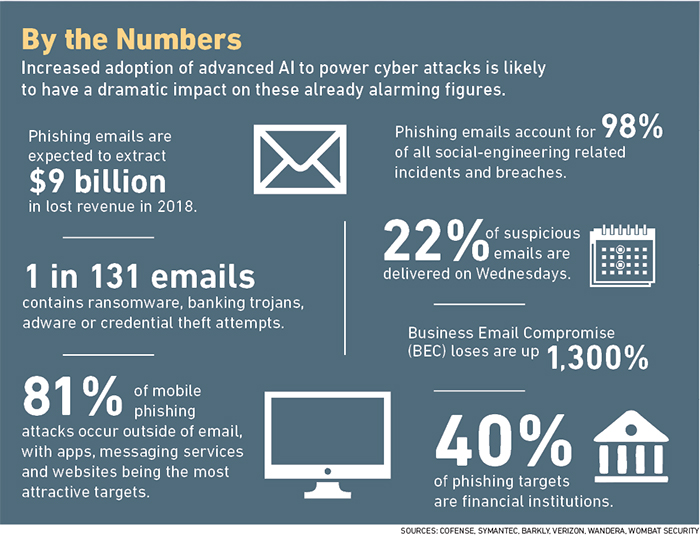

Machine learning tactics are also helping criminals perfect the sinister arts of phishing and spearphishing. A recent Trend Micro report indicates that phishing attacks are expected to net $9 billion in 2018. That figure will only keep rising, because phishing works. A study from security firm PhishMe found that 91 percent of cyber attacks start with a user clicking on a phishing email.

A growing number of companies are training their people to recognize phishing attempts, but advanced AI is making defense trickier, said Kevin Richards, global head of cyber risk consulting, Marsh. AI can learn end users’ responses to different tactics and adjust accordingly.

A target being heavily spammed might mark the emails as junk or try other tactics to stop the influx. But “the learning engine is changing the attack ‘look and feel’ just enough that it bypasses the security controls,” he said. At some point the user will click unsubscribe to make it go away. “That’s when the malware actually gets delivered,” said Richards. “And it’s kind of insidious, because you’re trying to do the right things.”

Spearphishing — or phishing for specific credentials or data — is highly lucrative, so attackers are willing to put in the time to gather what they need, compiling publicly available information and social media data in search of clues on how to approach each target with believable but fraudulent emails.

Assisted by machine learning algorithms, however, bad actors can reach their end game in a fraction of the time.

“Artificial intelligence can help process massive amounts of data and put together connections and build profiles of people that can be used for social engineering,” said Zeilman. “With AI you can [compile data] on a massive scale and very quickly … and create things that are way more comprehensive.”

Deep learning can also process communication and behavior patterns to make social engineering attacks seem so authentic that virtually no one would suspect foul play.

Recent reports on deepfake video techniques add a new level of alarm. It’s possible to envision a scenario where criminals could fake a Skype call with a CFO, instructing subordinates to wire money to a specific account. Even employees sufficiently trained on security procedures might consider bypassing those procedures when presented with “face-to-face” orders from the boss.

Increased ease of gathering credentials is also contributing to a booming crime-as-a-service industry on the Dark Web. Cyber criminals are building databases full of dossiers of high-value individual targets and their credentials. A “phisher” can now be someone who doesn’t even have to know how to code. He or she can just buy credentials on the Dark Web almost as easily as ordering from Amazon.

Machine learning is boosting the cyber-crime economy in other ways, including services that allow developers to upload attack code and malware and analyze how well security tools from different vendors detect them.

White Hats vs. Black Hats

So the good news is that the “good guys” are actively using advanced AI technologies to detect and defend against cyber incursions. The bad news is that the “bad guys” are working just as hard.

“I think that the good guys had a head start. So that’s worth something,” said Zeilman. “But I’m not sure how long that’s going to last.

“A lot of times, the real question is the motivation — what motivates the bad guys to use this new technology? And the answer to that is [usually] money,” he said.

“Cyber criminals tend to be lazy, so if they can get away without using AI and still make tons of money, there’s not a lot of incentive for them to move into that field. But if the defenders start using AI and it becomes difficult for the bad guys to keep up, then there’s a lot of motivation for them to get ahead of the defenders, keep up with their business model and continue to make lots of money,” Zeilman said.

“Innovation, unfortunately, can happen faster on the bad actors’ side, because they don’t really have things like quarterly earnings reports or outage windows for maintenance of the data center,” added Richards.

“They don’t worry about those things. They’re worrying about improving the yield of their attacks. If these tools can help them be more efficient and more effective, they’re going to continue their investment in making those tools more robust.”

In addition, what counts as “winning” looks very different from either side, experts noted.

“As the defender, you’ve got to find all the holes and plug them up. As the attacker, you’ve just got to find one hole and use it,” said Marlin. “That’s always the issue here when you’re playing on the defensive side. [The attackers] only need a small hit ratio in order to [succeed].”

The deeper concern is where the lines will eventually be drawn — and if they’ll be drawn at all, said Zeilman.

“The good guys [are working on AI] in established labs, and they do so in tightly controlled situations, with an eye towards the moral and ethical consequences of AI,” he said. “I think once you start getting people in the criminal sphere delving deeply into AI, they’re not necessarily going to have those kinds of moral and ethical considerations in mind. So we’re [perhaps] letting the genie out of the bottle.”

Cyber Security Is Only Part of the Solution

In terms of cyber coverage, a cyber attack powered by advanced AI isn’t going to change the way a policy responds. But as the scale of cyber-crime balloons, risk managers should consider whether their insurance coverage is aligned with their risk exposure, said Joshua Motta, CEO of Coalition, a new firm that provides comprehensive cyber insurance coverage, cyber security, and risk management services.

“Criminals can now operate at unprecedented scale and efficiency, and are using technology to their advantage to cause economic harm,” said Motta.

It’s time, he said, for a shift in thinking. While it’s true that many cyber attacks cannot be prevented, the losses from cyber attacks can be.

“I chose to focus on risk transfer because despite a company’s best efforts, there is always the latent risk of a cyber attack. Companies that are making best efforts at cyber security, but do nothing when it comes to transfer, have an incomplete solution.

“In reality you need to be doing all these things. You need to be able to prevent incidents from happening. You need a plan for how to mitigate them when they do happen. And you need a mechanism in place to help you recover and to help pay for any unforeseen costs you may incur following an incident.

“Only when you accomplish those things as a company are you prepared for the worst that could happen.”

The Hartford’s Marlin echoed Motta’s point. Given the constantly changing threat environment, he said, insureds need to include resilience in the way they think about their cyber programs and cyber response. The Hartford’s CyberChoice First Response includes “Post Incident Remediation Expense” coverage, an additional limit that insureds can use to improve their systems, even in ways unrelated to the claim itself.

“We are committed to being partners with our insureds, and we do want them to be better than they were even before the claim,” said Marlin.

Small to midsized businesses are the least likely to be prepared for an attack, said Motta, largely because they don’t understand why criminals would bother to attack them.

“There are targets of choice and targets of opportunity,” he said. Targets of choice — large companies like JP Morgan or Equifax — are targeted in many cases for who they are. Targets of opportunity, however, often fall into the crosshairs because of a misstep in how they’ve managed or configured their IT infrastructure.

“Cyber criminals are scanning for ‘nails’ that stick out above the surface, such as obvious vulnerabilities in a company’s IT infrastructure. Once they find one, they use their ‘hammer’ in the form of an exploit to target or take a swing at the company and their vulnerable systems.”

Smaller businesses in particular, said Motta, can benefit from partners who see the nails sticking out and show them what they can do about it.

Understand the Size of Your Footprint

As the cyber-crime industry continues to blossom, risk managers need to be looking at the broader picture of risk and considering whether they have peripheral exposures.

“As companies go more digital in their business processes, the problem extends beyond corporate IT,” said Richards. “So this is becoming less about protecting my private data center and more about how my digital footprint has expanded across suppliers, across the cloud, across mobile, across social media, and they need to bring all of this into account.”

The “extended ecosystem,” said Richards, includes every digital relationship where something important to the company is being shared with third parties.

“Maybe I’ve outsourced part of a business process like manufacturing or distribution. They have critical intellectual property; now my cyber security program has to include them in my strategies. Risk managers need to ensure that their net covers all of that.”

Far too often that net falls short, said Richards. “It can be a little bit staggering that in some cases the CISO might only have visibility on maybe 60 percent of their extended ecosystem, meaning there’s 40 percent floating out there potentially wildly exposed, and they don’t even know about it, let alone actively trying to protect it.”

Insurers are also actively considering their own footprints, experts said, and looking at the potential for aggregation exposure.

“There are some worrying implications for risk accumulation, or the likelihood that a single attack could trigger losses across a larger number of companies,” said Motta.

“Frankly that’s the biggest challenge for the insurance industry, no matter what the class of insurance [but it’s a] particular challenge with cyber.

“Accumulation risk is more difficult to price because you’re really talking about pricing catastrophic risk as opposed to pricing the everyday probability that an individual company is going to get hacked.”

The post-AI cyber landscape could force a profound shift in the way underwriters approach cyber risks, said Zeilman.

“Every now and then a technological change essentially renders your past history certainly less relevant — perhaps completely irrelevant,” he said.

“All this data that we have at hand tells us to some extent how these risks are going to behave. It might have to be thrown out the window, because [the future brings] a significant technological shift that will cause cyber criminals to act very differently, and will cause harm or loss experience to change in unpredictable ways.”